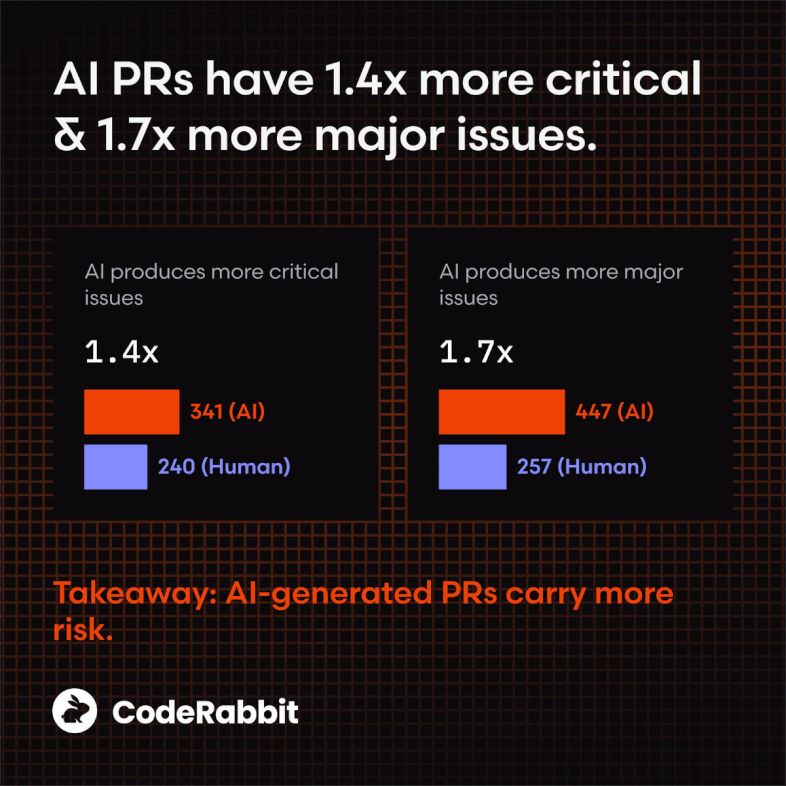

Researchers at CodeRabbit examined 470 pull requests from open-source projects on GitHub: 320 generated with AI assistance and 150 written manually. Their analysis found that AI-generated contributions contained 1.7 times more significant defects and 1.4 times more critical issues than hand-written code. On average, each AI-generated pull request included 10.83 issues, compared to 6.45 in manually authored submissions.
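As a quick sanity check, the two per-pull-request averages can be compared directly. The short sketch below only divides the figures quoted above; the function and variable names are illustrative and do not come from the CodeRabbit report.

```python
def issue_ratio(ai_avg: float, human_avg: float) -> float:
    """Return how many times more issues an average AI PR has than a manual one."""
    return ai_avg / human_avg


# Averages quoted in the article (issues per pull request).
AI_ISSUES_PER_PR = 10.83
HUMAN_ISSUES_PER_PR = 6.45

ratio = issue_ratio(AI_ISSUES_PER_PR, HUMAN_ISSUES_PER_PR)
print(f"AI-assisted PRs average {ratio:.2f}x the issues of manual PRs")
```

The result is a little under 1.7, in line with the headline multiplier.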


AI-generated code showed higher rates across multiple problem categories. It contained 1.75 times more logical errors, 1.64 times more issues related to code quality and maintainability, 1.56 times more security vulnerabilities, and 1.41 times more performance problems. Several specific security issues stood out.

Specifically, AI-written code was 1.88 times more likely to mishandle passwords, 1.91 times more likely to permit insecure object access, 2.74 times more likely to introduce cross-site scripting (XSS) vulnerabilities, and 1.82 times more likely to suffer from insecure data deserialization.

In contrast, human-written code showed higher rates of minor issues. Spelling errors occurred 1.76 times more frequently, and testing-related errors appeared 1.32 times more often in manually authored pull requests.

Broader Trends in AI-Assisted Development

Additional studies support these findings and reveal further complexities in AI-assisted software development.

Higher Rejection Rates

A November study by Cortex reported that AI adoption led to a 20% increase in the average number of pull requests per developer compared to the previous year. However, the same period saw a 23.5% rise in the number of issues per pull request and a roughly 30% increase in rejection rates for proposed changes.
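To see how these two increases interact, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that the 20% and 23.5% figures describe the same developers and compound multiplicatively; the combined number is not a figure reported by Cortex.

```python
def combined_growth(pr_growth: float, issues_per_pr_growth: float) -> float:
    """Growth in total issues per developer if both increases compound.

    Assumption (not from the study): total issues = PRs x issues per PR,
    and both growth rates apply to the same population.
    """
    return (1 + pr_growth) * (1 + issues_per_pr_growth) - 1


# Figures quoted in the article.
PR_GROWTH = 0.20             # +20% pull requests per developer
ISSUES_PER_PR_GROWTH = 0.235  # +23.5% issues per pull request

total = combined_growth(PR_GROWTH, ISSUES_PER_PR_GROWTH)
print(f"Implied growth in issues per developer: {total:.1%}")
```

Under that assumption, more pull requests with more issues each would mean roughly 48% more issues per developer to review.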

Maintainability

An August study from the University of Naples observed that AI-generated code tends to be simpler and more uniform in structure. Yet it often includes unused constructs and inline debugging statements. Hand-written code, while structurally more complex, presents greater maintainability challenges.

Actual Productivity

A July experiment conducted by the METR group found that AI assistants did not accelerate task completion. In fact, participants completed tasks more slowly when using AI tools, despite their belief that the technology improved their speed.
