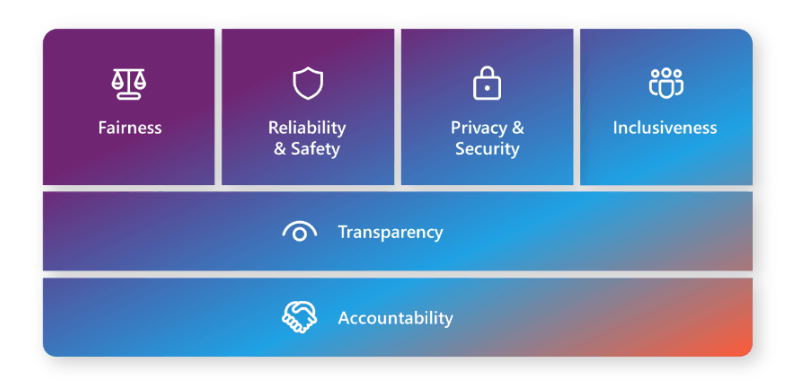

Microsoft has reaffirmed its commitment to building AI features for Windows in line with its responsible AI principles. These principles, outlined in the Microsoft Responsible AI Standard, cover fairness, trustworthiness, security, privacy, inclusion, and transparency. The company says its AI-powered tools are designed not only to enhance user productivity but also to prioritize ethical considerations and user safety.


Enhanced security and privacy for Recall

One of the standout features introduced for Copilot+ PCs is Recall, which allows users to retrieve past actions, documents, or content by describing them in natural language. Recognizing the sensitivity of this feature, Microsoft has implemented robust measures to ensure user control, transparency, and data protection.

Full user control

Recall is entirely optional, and users stay in control of how it works. They can:

- Turn the feature on or off at any time.

- Pause saving screenshots if they prefer not to record certain actions.

- Set filters to exclude certain apps, websites, or actions from being captured.

- Control how long screenshots are stored and delete saved data whenever they want.

Transparency in operation

Microsoft prioritizes clarity about how Recall works. During the initial Windows setup, users are told what the feature does and asked for permission to save screenshots. A system tray icon shows in real time when screenshots are being taken, so users always know when Recall is active. Additionally, when Recall first launches, the system prompts users to confirm their settings, so they can verify that everything is configured as expected.

Security and privacy assurances

To protect sensitive information, Recall integrates multiple layers of security:

- Biometric authentication: Recall requires Windows Hello to be set up with facial recognition or fingerprint scanning, so that only authorized users can access stored data.

- Content exclusions: Screenshots are not saved when viewing DRM-protected content or browsing in incognito/privacy modes in supported browsers.

- Local data processing: All captured data remains on the user’s device, where it is encrypted and processed in an isolated memory environment (such as a VBS Enclave). This is designed to prevent unauthorized access and to keep data from being sent to external servers or third parties.

Ethical use of AI in Windows apps

Beyond Recall, Microsoft has integrated responsible AI practices into other innovative apps available on the platform. For example:

Creative tools with AI enhancements

- The updated Paint and Photos apps now allow users to transform images into artistic styles or create entirely new visual effects based on text descriptions.

- A notable addition is the Restyle Image feature, which applies artistic effects while maintaining the integrity of human faces in the foreground. Advanced AI models identify and protect facial features, ensuring that changes are limited to the background and non-human objects. Importantly, no identifiable biometric data is collected, processed, or stored during this process, further protecting user privacy.

Responsibly designed local AI models

Microsoft has designed local AI models like Phi Silica with a focus on ethical use. These models include built-in content moderation tools to filter out harmful or inappropriate material, ensuring that the generated results meet high standards of appropriateness and safety. By keeping these models local, Microsoft avoids dependence on cloud processing, improving both performance and data security.

A structured approach to responsible innovation

Microsoft's approach to integrating AI into its products goes beyond individual features. The company follows a structured framework to guide planning and decision-making throughout the product lifecycle, enabling responsible innovation at every stage:

Governance: This foundational phase covers the entire lifecycle of an AI feature or product. It includes rigorous pre-release reviews to map, measure, and manage potential risks effectively.

Risk Mapping: Before development begins, Microsoft conducts thorough analyses to identify and assess risks associated with AI usage. This step ensures compliance with privacy, security, and ethical standards, helping mitigate potential harms early in the process.

Measurement: Comprehensive testing is performed to evaluate and address identified risks. Benchmarking and simulated “attacks” are carried out by specialized teams to stress-test the system and eliminate vulnerabilities.

Risk Management: After release, continuous monitoring and improvement are essential. Microsoft ensures resilience by evolving AI features over time, addressing emerging challenges, and incorporating user feedback to refine functionality.

You'll find the official announcement here.
