Google is about to begin an experiment with built-in artificial intelligence in the Chrome browser. The company is currently inviting participants to join a closed test that embeds a large language model into Chrome.

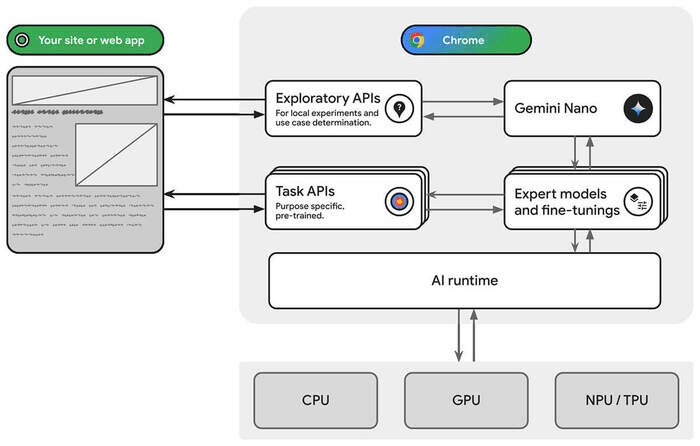

To give web applications and browser extensions access to the model, the company offers the Prompt API, which lets you send natural-language requests much as you would to a chatbot. The expectation is that a large language model built into the browser will make AI tasks in web applications simpler to run and will spare developers from installing and managing language models themselves.

The runtime that executes the model automatically uses the GPU and NPU available in the system to speed up inference, and falls back to the CPU otherwise. Running the model on the user's own system keeps the processed data private, allows work to continue offline or over a poor network connection, reduces request latency, and removes the dependence on external services.
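
The fallback behavior described above can be pictured as a simple preference-order lookup. The following is only a sketch: the article does not say how the runtime ranks accelerators, so the `PREFERENCE` order, the `Backend` type, and the `chooseBackend` function are all hypothetical names introduced for illustration.

```typescript
// Hypothetical backend labels; not taken from Chrome's runtime.
type Backend = "gpu" | "npu" | "cpu";

// Assumed preference order. The article only says that GPU and NPU are
// used when available and that the CPU is the fallback.
const PREFERENCE: Backend[] = ["gpu", "npu", "cpu"];

// Return the first preferred backend that the system reports as available.
function chooseBackend(available: Backend[]): Backend {
  for (let i = 0; i < PREFERENCE.length; i++) {
    if (available.indexOf(PREFERENCE[i]) !== -1) {
      return PREFERENCE[i];
    }
  }
  // Assumption: the CPU path always exists, so it is the final fallback.
  return "cpu";
}
```

Treating the CPU as an unconditional last resort mirrors the article's wording; a real runtime would likely also weigh memory limits and driver support.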

The Prompt API already goes beyond basic one-off natural-language queries. It can process and classify data while taking into account the context of earlier queries and data within the same session. The model can also be used to pick the best option from a set, for example choosing a fitting icon from a list of emoji for a specific comment on a website.
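
The emoji example could be approached roughly as follows. This is a minimal sketch under stated assumptions: `PromptSessionLike`, `buildEmojiPrompt`, `parseEmojiChoice`, and `pickEmoji` are hypothetical names, and the single `prompt()` method is an assumed shape rather than Chrome's actual API surface, which Google says is still changing.

```typescript
// Hypothetical session shape: one method that takes text and resolves to
// the model's reply. This is NOT the real Prompt API definition.
interface PromptSessionLike {
  prompt(input: string): Promise<string>;
}

// Build a classification prompt that restricts the model to a fixed list.
function buildEmojiPrompt(comment: string, emojis: string[]): string {
  const options = emojis.map((e, i) => (i + 1) + ". " + e).join("\n");
  return [
    "Pick the single best emoji for the comment below.",
    "Answer with the number of the option only.",
    "Comment: " + comment,
    "Options:",
    options,
  ].join("\n");
}

// Parse the reply defensively, since a model may add extra words.
// Returns null when no valid option number is found.
function parseEmojiChoice(reply: string, emojis: string[]): string | null {
  const match = reply.match(/\d+/);
  if (!match) return null;
  const index = Number(match[0]) - 1;
  return index >= 0 && index < emojis.length ? emojis[index] : null;
}

// Send the prompt through whatever session object is supplied.
async function pickEmoji(
  session: PromptSessionLike,
  comment: string,
  emojis: string[]
): Promise<string | null> {
  const reply = await session.prompt(buildEmojiPrompt(comment, emojis));
  return parseEmojiChoice(reply, emojis);
}

// Stand-in session for local experiments; it always answers "2".
const fakeSession: PromptSessionLike = {
  prompt: async () => "2",
};
```

Asking for an option number instead of the emoji itself keeps the reply easy to validate, and the `null` result gives the page a clear signal to fall back to a default icon.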

Google explained that the API is in active development and will be expanded and changed based on user feedback and preferences before a final version is adopted. In the future, Google plans to make it available through Origin Trials, a mechanism that lets applications use experimental APIs when they are served from localhost or 127.0.0.1, or after the site registers and receives a special token that is valid for a limited time for a specific site. At the same time, Google is working with other browser makers to standardize the APIs under development.

If I start with AI, everything will run locally on my own hardware. It's not that difficult, and if you have a powerful GPU, everything works fine without a lot of extra expense for subscriptions and other misery.

Unfortunately, most people will buy a service for that. I’m old and grumpy and live in a different world where I do most things myself!

Can’t say better!